LeCun's I-JEPA, LLM for Streaming Applications, and Automatically Tuned Gradient Descent

PandaScore Research Insights #12

I-JEPA

What it is about: In April, Yann LeCun's team at Meta published a paper introducing the Image-based Joint-Embedding Predictive Architecture (I-JEPA). This approach enables learning semantically rich image representations without relying on hand-crafted data augmentations.

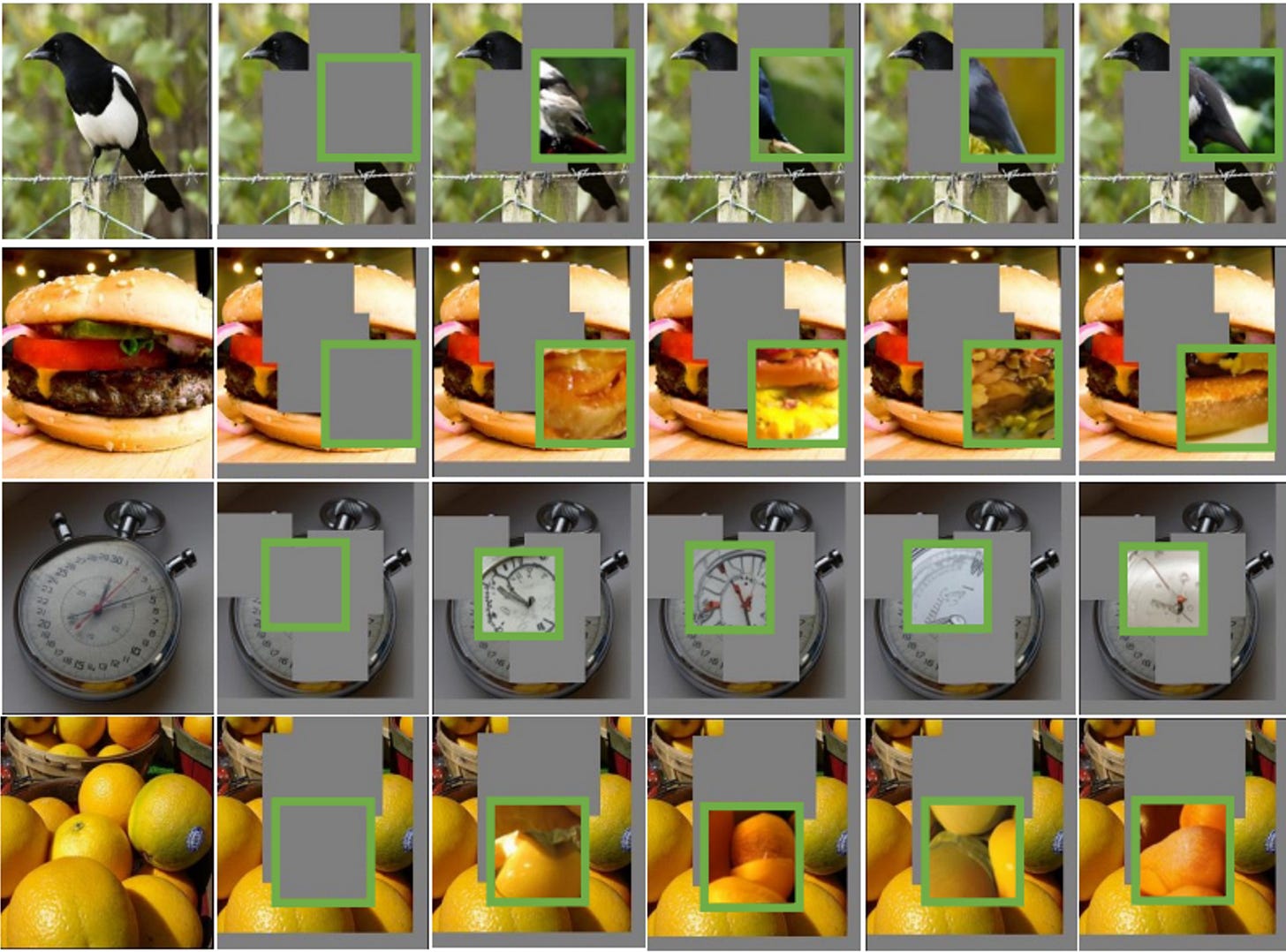

How it works: I-JEPA predicts the masked portions of an image, but it does so in embedding space rather than in pixel space. Although it resembles generative architectures, it learns more semantic features than they do. For instance, when reconstructing part of an animal, I-JEPA identifies which body part is missing instead of focusing on the exact pixels needed to redraw it. This kind of semantic understanding is usually obtained by joint-embedding architectures trained with hand-crafted data augmentations; I-JEPA achieves it without them, removing the need for expert knowledge and meticulous tuning.

In detail, I-JEPA uses a single context block (a random crop of the original image) to predict the representations of several target blocks within the same image. Vision transformers serve as both the encoders and the predictor. During training, an L2 loss is applied between the predicted and the target patch representations.
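To make the training signal concrete, here is a minimal, dependency-free Python sketch of three ingredients described above: block masking, a loss computed in embedding space, and the update of the target encoder. It is an illustration under stated assumptions, not Meta's implementation. The ViT encoders and predictor are omitted. In the paper, the target encoder sees the full image and its weights are an exponential moving average (EMA) of the context encoder's weights, and target patches are removed from the context block so the predictor cannot simply copy them. The grid size, block sizes, number of targets, and momentum value are illustrative choices, the squared-L2 form of the loss is a simplification, and all function names are hypothetical.

```python
import random
from typing import Dict, List, Set, Tuple

Patch = Tuple[int, int]  # (row, col) index of a patch in the ViT patch grid
Embedding = List[float]


def sample_block(grid: int, block_h: int, block_w: int, rng: random.Random) -> Set[Patch]:
    """Patch indices covered by one randomly placed rectangular block."""
    top = rng.randrange(grid - block_h + 1)
    left = rng.randrange(grid - block_w + 1)
    return {(r, c) for r in range(top, top + block_h) for c in range(left, left + block_w)}


def sample_context_and_targets(grid, num_targets, target_hw, context_hw, rng):
    """Sample several target blocks and one context block, then remove the
    target patches from the context so they must be predicted, not copied."""
    targets = [sample_block(grid, *target_hw, rng) for _ in range(num_targets)]
    context = sample_block(grid, *context_hw, rng)
    for block in targets:
        context -= block
    return context, targets


def embedding_l2_loss(predicted: Dict[Patch, Embedding], target: Dict[Patch, Embedding]) -> float:
    """Squared L2 distance between predicted and target patch embeddings,
    averaged over target patches. No pixels are reconstructed."""
    total = 0.0
    for patch, tgt in target.items():
        total += sum((p - t) ** 2 for p, t in zip(predicted[patch], tgt))
    return total / len(target)


def ema_update(target_params: List[float], context_params: List[float],
               momentum: float = 0.996) -> List[float]:
    """Target-encoder weights follow an exponential moving average of the
    context-encoder weights (the momentum value here is an assumption)."""
    return [momentum * t + (1.0 - momentum) * c for t, c in zip(target_params, context_params)]


# Hypothetical setup: a 14x14 patch grid (a 224x224 image with 16x16 patches),
# 4 target blocks of 4x4 patches, and one 12x12 context block.
rng = random.Random(0)
context, targets = sample_context_and_targets(14, 4, (4, 4), (12, 12), rng)
```

In a full training step, the context encoder and predictor would produce `predicted` for the patches in each target block, the target encoder would produce `target` from the whole image, gradients of `embedding_l2_loss` would update only the context encoder and predictor, and `ema_update` would then refresh the target encoder.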

Results: Once trained, the I-JEPA encoder can serve as a pre-trained backbone for downstream tasks. The paper demonstrates this on a variety of tasks, including low-shot ImageNet classification, object counting, and depth prediction. I-JEPA outperforms methods that do not use data augmentations and rivals the best methods that do. It also scales efficiently, requiring fewer parameters and less training time.

Why it matters: While data augmentation is a very useful technique for helping models generalize, it often requires expert knowledge and careful engineering to be effective. This paper presents a way to remove that constraint. Furthermore, it is the first paper to put into practice Yann LeCun's view on how to make machines learn as efficiently as humans (head over to our previous post if you want to learn more about it).

Our takeaways: At PandaScore, our computer vision models rely heavily on data augmentation to adapt to frequent data shifts, such as new HUDs in video streams. However, this approach is maintenance-intensive, and the I-JEPA method offers a potential solution to this challenge. We were also pleased to see successful experiments with scaled-down models. After all, not all real-world applications need very big models!

Efficient Streaming Language Models with Attention Sinks

What it is about: In November, MIT and Meta introduced StreamingLLM, a framework for making Large Language Models (LLMs) efficient in streaming applications. Streaming applications involve processing ongoing and potentially unbounded input streams, such as continuous dialogue or real-time text analysis, which requires the model to handle very long interaction sequences efficiently.

How it works: In Transformer models, the foundation of most LLMs, each token's attention is computed by comparing its query with the Keys of previous tokens and then taking a weighted average of their Values. During generation, the Key and Value (KV) states of past tokens are cached so that they do not have to be recomputed. In streaming applications with prolonged interactions, caching the states of all past tokens quickly requires a prohibitive amount of memory. To address this, StreamingLLM keeps only the KV states of the first few tokens, which act as "attention sinks", together with a rolling window of the most recent tokens.

The rationale behind attention sinks lies in the tendency of LLMs to allocate significant attention to initial tokens, regardless of their relevance. This happens because the Softmax operation requires attention scores to sum to one across all contextual tokens. Consequently, even when the current token does not strongly match most previous tokens, the model must still place the leftover attention somewhere. Initial tokens are natural "attention sinks" because they are visible to almost all subsequent tokens: they absorb this surplus attention and stabilize the attention distribution. This is also why simply evicting them, as plain window attention does, sharply degrades the model.
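The cache policy described above can be sketched in a few lines of standard-library Python. This is a minimal illustration under assumptions, not the StreamingLLM code: the class and function names are hypothetical, keys and values are plain Python lists standing in for per-head tensors, and the default of 4 sink tokens and an 8-token window are illustrative sizes. The real system also assigns positional information based on positions within the cache rather than in the original text, which is omitted here. The `attend` function shows why sinks are needed: the softmax weights always sum to one, however weakly the query matches the cached keys.

```python
import math
from collections import deque
from typing import Deque, List, Tuple

Vector = List[float]


class SinkKVCache:
    """Hypothetical KV cache: keeps the first `num_sinks` tokens forever plus
    a rolling window of the `window` most recent tokens; tokens in between
    are evicted, so memory stays bounded by num_sinks + window entries."""

    def __init__(self, num_sinks: int = 4, window: int = 8):
        self.num_sinks = num_sinks
        self.sinks: List[Tuple[Vector, Vector]] = []
        self.recent: Deque[Tuple[Vector, Vector]] = deque(maxlen=window)

    def append(self, key: Vector, value: Vector) -> None:
        if len(self.sinks) < self.num_sinks:
            self.sinks.append((key, value))  # the first tokens become attention sinks
        else:
            self.recent.append((key, value))  # deque drops its oldest entry when full

    def entries(self) -> List[Tuple[Vector, Vector]]:
        return self.sinks + list(self.recent)


def attend(query: Vector, cache: SinkKVCache) -> Tuple[Vector, List[float]]:
    """Scaled dot-product attention of one query over the cached keys and values."""
    entries = cache.entries()
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key, _ in entries]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]  # softmax: the weights always sum to one
    dim = len(entries[0][1])
    output = [sum(w * value[i] for w, (_, value) in zip(weights, entries)) for i in range(dim)]
    return output, weights


# Hypothetical usage: stream 20 one-dimensional tokens through the cache.
cache = SinkKVCache(num_sinks=4, window=8)
for t in range(20):
    cache.append([float(t)], [float(t)])
output, weights = attend([0.1], cache)
```

After 20 tokens, the cache holds tokens 0 to 3 (the sinks) and 12 to 19 (the window); tokens 4 to 11 have been evicted.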

Results: StreamingLLM achieves up to a 22.2× speedup over the sliding-window-with-re-computation baseline and can stably model texts of up to 4 million tokens. On long sequences, it outperforms dense attention, runs as fast as window attention, and performs on par with sliding window with re-computation (as depicted in the figure above).

Why it matters: Efficiently processing lengthy text sequences is crucial for real-world LLM applications, impacting various sectors from customer support to content generation.

Our takeaways: We were quite surprised by how much more important the first tokens are than the others. While StreamingLLM leverages this property well for streaming applications, it may also reveal a hidden weakness in current LLMs that warrants further investigation.

Gradient Descent: The Ultimate Optimizer

What it is about: This NeurIPS 2022 article, authored by research scientists from Meta and Stanford, tackles the optimization of optimizers themselves.

How it works: The main contribution of this work is a general method for automatically tuning the parameters of a neural network's optimizer (its hyperparameters, such as the learning rate). The approach can be applied to various backpropagation-based optimizers, such as SGD and Adam. The framework also makes it easy to stack multiple optimizers, each one tuning the hyperparameters of the one below it.

More specifically, it relies on a simple tweak in how the computation graph is written: the hyperparameters are kept in the graph of the weight update instead of being treated as constants. With this rewriting, errors can be back-propagated into the hyperparameter variables themselves, so that they are optimized automatically. As in modern deep learning libraries, differentiation is automatic; the only thing left to do is to set the hyper-hyperparameters responsible for optimizing the hyperparameters.
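For plain SGD, the gradient that flows back into the learning rate has a simple closed form. Since $w_t = w_{t-1} - \alpha \nabla f(w_{t-1})$, the derivative of the loss at the new weights with respect to $\alpha$ is

$$\frac{\partial f(w_t)}{\partial \alpha} = -\nabla f(w_t) \cdot \nabla f(w_{t-1}),$$

so $\alpha$ can itself be updated by gradient descent with a step size $\kappa$, the hyper-hyperparameter. The paper obtains this gradient through automatic differentiation; the sketch below instead hard-codes the closed form so that it runs without any deep learning library. The toy quadratic loss, the function names, and the chosen values are all assumptions for illustration, not the authors' code.

```python
from typing import List, Tuple

Vector = List[float]


def grad_quadratic(w: Vector, curvature: Vector) -> Vector:
    """Gradient of a hypothetical toy loss f(w) = 0.5 * sum(c_i * w_i^2)."""
    return [c * x for c, x in zip(curvature, w)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sgd_with_learned_lr(w0: Vector, alpha0: float, kappa: float, steps: int,
                        curvature: Vector) -> Tuple[Vector, float]:
    """SGD whose learning rate alpha is itself trained by gradient descent.

    Uses the closed-form hypergradient d f(w_t) / d alpha = -g_t . g_{t-1}
    in place of autodiff; kappa is the hyper-hyperparameter."""
    w, alpha = list(w0), alpha0
    prev_g = grad_quadratic(w, curvature)
    w = [x - alpha * g for x, g in zip(w, prev_g)]  # one plain SGD step to obtain g_{t-1}
    for _ in range(steps):
        g = grad_quadratic(w, curvature)
        hypergrad = -dot(g, prev_g)
        alpha -= kappa * hypergrad  # gradient step on the learning rate
        w = [x - alpha * gi for x, gi in zip(w, g)]  # gradient step on the weights
        prev_g = g
    return w, alpha


# Hypothetical usage: start from a deliberately small learning rate and let it adapt.
w, alpha = sgd_with_learned_lr([1.0, -2.0], alpha0=0.01, kappa=0.01, steps=50,
                               curvature=[1.0, 3.0])
```

With `kappa=0` the function reduces to ordinary SGD with a fixed learning rate. Stacking, in the paper's sense, would mean learning `kappa` with the same trick; that level is not shown here.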

Results: First, their method of automatically tuning the optimizers generally outperforms classic Stochastic Gradient Descent. For Adam, the automatically tuned version still comes out ahead unless considerable effort goes into hand-tuning Adam's hyperparameters. Furthermore, in some experiments, the automatically tuned hyperparameters match the performance of those hand-tuned by expert engineers.

What they found out: With enough stacked optimizers, the initial hyperparameter values no longer matter much. More broadly, the authors convey the idea that hand-tuning hyperparameters may soon no longer be worth the effort.

Why it matters: While the theory of neural network optimization has been studied extensively, there is still plenty of room for improvement in how hyperparameters are set and optimized. This work could also reduce the hassle of searching for a good set of hyperparameters.

Our takeaways: Overall, we appreciate it when such a subject is tackled with simple, well-rounded ideas. Optimization papers are generally about limits, speed, and convergence rates. Here, by contrast, we have a deep yet practical paper whose contribution is very simple to implement, despite dealing with a complex and arid subject.